Week 9 - 11

In Weeks 9 to 11, I worked on the two experiments I mentioned earlier. In the first experiment, I want to make my 3D scans more accurate, so I will look into NeRF, which renders a set of images into a 3D scene. In the second experiment, I will look into creating a metaverse space and importing assets into it, using Spatial and Unity to visualise the digital space.

Experiment 1: Bringing physical object to digital space

Instant NeRF by NVIDIA

Instant NeRF, from NVIDIA Research, uses AI to turn 2D photos into 3D scenes. It is a neural rendering model that learns a high-resolution 3D scene in seconds and can render images of that scene in a few milliseconds. NVIDIA applied this approach to a popular new technology called neural radiance fields, or NeRF. NeRFs use neural networks to represent and render realistic 3D scenes based on an input collection of 2D images. The model requires just seconds to train on a few dozen still photos.

Questions to find out:

• How to train NeRF?

• How fast is it to train NeRF?

• How accurate will the 3D model be?

For this experiment, I downloaded Instant NeRF (instant-ngp) from GitHub. Because it relies on Python scripts and an AI model, it took me quite some time to figure out how to install and use it.

How it works

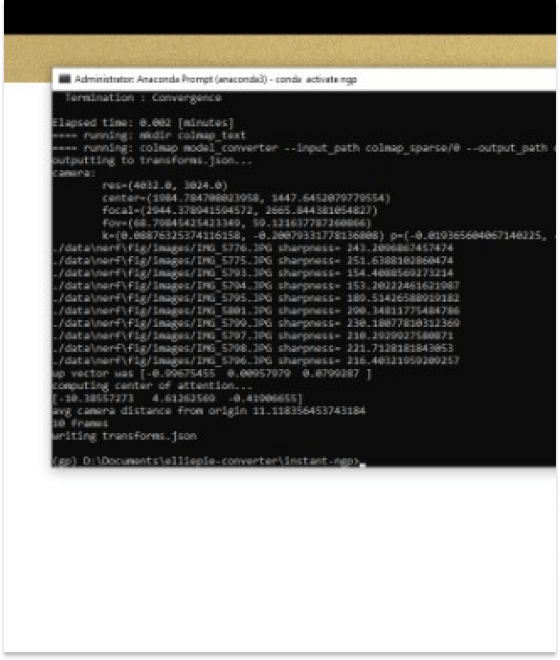

After downloading the beta test from GitHub, I used Anaconda and PowerShell to run it. A script first activates the Instant NeRF environment and generates a JSON file from the photos. That JSON file is then loaded into the beta test application (testbed.exe) to generate the 3D model.

To generate the JSON file using Anaconda Prompt (anaconda3):

• cd (path to the folder on the C: or D: drive where the files were downloaded)

• cd ngp

• cd instant-ngp

• conda activate ngp (activates the conda environment named ngp)

• python scripts/colmap2nerf.py --colmap_matcher exhaustive --run_colmap --aabb_scale 16 --images data/playground (data/playground is the folder containing the photos)

• D:\Documents\elliepie-converter\instant-ngp\build\testbed.exe --scene data/nerf/fig (launches the render application on the scene folder)
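The last two steps above can also be written as a small Python helper, which makes it easier to rerun the same commands on a different photo folder. This is only a sketch: the function names (build_colmap2nerf_command, build_testbed_command, run_pipeline) are my own and not part of instant-ngp, the flags are copied from the commands listed above, and it assumes the ngp conda environment is already active so that "python" points to the right interpreter.

```python
import subprocess
from pathlib import Path


def build_colmap2nerf_command(images_dir, aabb_scale=16):
    """Return the argument list for the colmap2nerf.py step shown above."""
    return [
        "python", "scripts/colmap2nerf.py",
        "--colmap_matcher", "exhaustive",
        "--run_colmap",
        "--aabb_scale", str(aabb_scale),
        "--images", Path(images_dir).as_posix(),
    ]


def build_testbed_command(testbed_exe, scene_dir):
    """Return the argument list that opens a scene folder in the render application."""
    return [str(testbed_exe), "--scene", Path(scene_dir).as_posix()]


def run_pipeline(repo_dir, images_dir, testbed_exe, scene_dir):
    """Run both steps from inside the instant-ngp folder (hypothetical wrapper)."""
    # check=True stops the pipeline if the first step fails.
    subprocess.run(build_colmap2nerf_command(images_dir), cwd=repo_dir, check=True)
    subprocess.run(build_testbed_command(testbed_exe, scene_dir), cwd=repo_dir, check=True)


if __name__ == "__main__":
    # Only prints the commands; call run_pipeline(...) to actually execute them.
    print(" ".join(build_colmap2nerf_command("data/playground")))
    print(" ".join(build_testbed_command("build/testbed.exe", "data/nerf/fig")))
```

Keeping the commands as lists (instead of one long string) avoids quoting problems when a folder name contains spaces.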

Generating 3D model

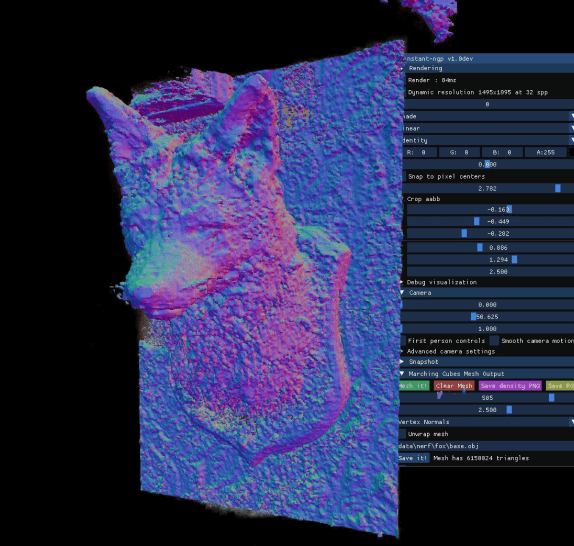

NeRF generates a 3D model from the images you provide. After I launched the beta test with the JSON file, NeRF started training to build the 3D model. The panel on the left side shows the NeRF training, where the images are processed and where changes, such as editing the 3D model, can be made.

Insights

I encountered some challenges when generating the JSON file for the 3D model. Some of my photos were rejected during this step: only 21 of the 50 images were accepted, while the rest were rejected. If more photos had been accepted, the 3D model could have been more accurate, but the software may not have been able to detect enough in them. The back of the 3D model is still accurate, while the front is blurry. The object could also be too small in the photos, making it harder for the AI to detect.
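To find out which photos were rejected, the generated JSON file can be compared against the photo folder. The sketch below assumes the file is named transforms.json and contains a "frames" list in which each entry has a "file_path" (this is how I understand the output of colmap2nerf.py, but it should be checked against the actual file). The function names split_accepted and report are my own.

```python
import json
from pathlib import Path

IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def file_name(path_text):
    """Return the last path component, accepting both / and \\ separators."""
    return path_text.replace("\\", "/").rsplit("/", 1)[-1]


def split_accepted(transforms, image_names):
    """Split image names into (accepted, rejected) based on the JSON frames."""
    # Assumption: every accepted photo appears once under "frames" with a "file_path".
    accepted_names = {file_name(frame["file_path"]) for frame in transforms.get("frames", [])}
    accepted = sorted(name for name in image_names if name in accepted_names)
    rejected = sorted(name for name in image_names if name not in accepted_names)
    return accepted, rejected


def report(transforms_path, images_dir):
    """Print how many photos were accepted and list the rejected ones."""
    transforms = json.loads(Path(transforms_path).read_text())
    names = [p.name for p in Path(images_dir).iterdir() if p.suffix.lower() in IMAGE_SUFFIXES]
    accepted, rejected = split_accepted(transforms, names)
    print(f"{len(accepted)}/{len(names)} images accepted")
    for name in rejected:
        print("rejected:", name)
```

Looking at the rejected photos side by side (for example, whether they are all from the front of the object) may help explain why the front of the model came out blurry.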

Qlone

As before, I used Qlone to scan physical collectibles and create 3D objects from them. Compared with other software, I find this app the most user-friendly. It has an AR guide that I follow with my phone so that I can scan the objects properly. To improve accuracy, the phone is mounted on a tripod while the object is scanned on a turntable.

Experiment 2: Uploading digital asset into the Metaverse

In this section, I discuss how I created a demo of a metaverse/digital space where avatars can move around and where 3D assets can be uploaded. I find it interesting to create the kind of environment I envision and to upload 3D scanned objects into it. I took the space from ideation to creation using SketchUp Pro and Enscape.

Spatial

Spatial.io is a virtual meeting space. It can be used with VR headsets such as Meta Quest, on a computer, or through Spatial's mobile app on iOS and Android. Designers use Spatial by importing a 3D model of an environment and showcasing their work in the designed space. As part of the demo, I will upload my 3D scans and create a customisable space using Spatial.

Building tools

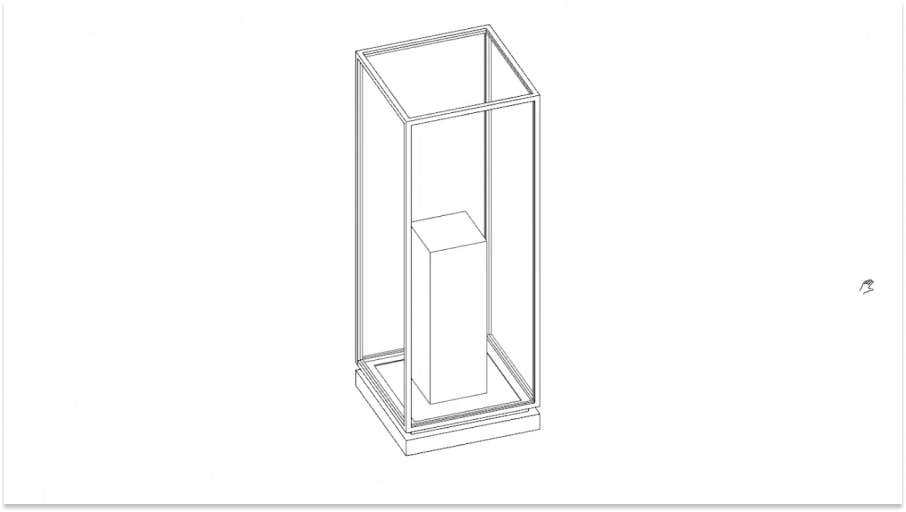

Spatial allows me to create my own space. I imported a free 3D model of a gallery into Spatial and added my 3D scanned objects from Qlone. I exported the scans as .GLB because this format keeps the textures inside the same file when it is imported. .OBJ and other formats are supported, but their textures are stored in separate files. For this reason, .GLB was the best format for my workflow. The 3D objects exported from Qlone are already a suitable size and don't require additional steps to lower their resolution.
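Before uploading, it can be useful to confirm that an exported file really is a binary .GLB and not a renamed .OBJ. A GLB file starts with a 12-byte header: the four characters "glTF", then a version number and the total file length, each stored as a little-endian 32-bit integer. The sketch below only reads that header; the function names read_glb_header and is_glb are my own.

```python
import struct

GLB_MAGIC = b"glTF"  # first four bytes of every binary glTF (.glb) file


def read_glb_header(data):
    """Parse the 12-byte GLB header and return its version and declared length."""
    if len(data) < 12:
        raise ValueError("too short to be a GLB file")
    # "<4sII" = little-endian: 4 raw bytes, then two unsigned 32-bit integers.
    magic, version, length = struct.unpack("<4sII", data[:12])
    if magic != GLB_MAGIC:
        raise ValueError("not a GLB file (missing glTF magic)")
    return {"version": version, "length": length}


def is_glb(path):
    """Return True if the file at path starts with a valid GLB header."""
    with open(path, "rb") as handle:
        head = handle.read(12)
    try:
        read_glb_header(head)
    except ValueError:
        return False
    return True
```

This does not check the textures themselves; it only confirms that the file is in the single-file binary format rather than a text format such as .OBJ.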

Customised space

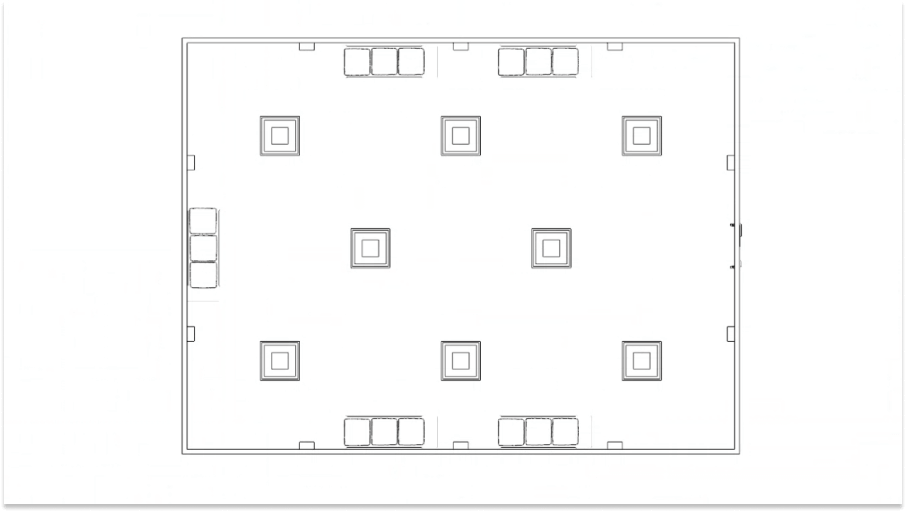

The goal of my project is to create a digital marketplace. I envision it as a gallery where people can buy, sell, and trade. I want to design an interior space where collectibles can also be displayed. It is important to me that the design direction be minimalistic, simple, elegant, and gallery-like. The space has some important requirements, such as figurines displayed on top of platforms. To begin with, it will hold eight figurines. The space will be designed using SketchUp and Enscape.

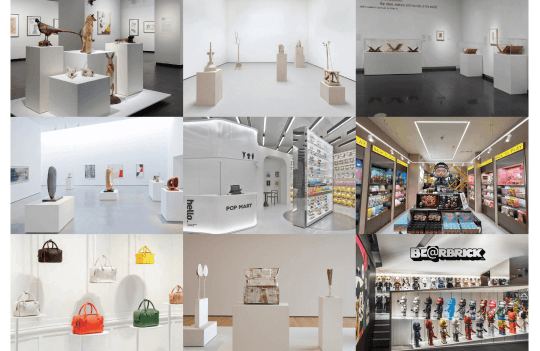

Moodboard


Rendered design

Using SketchUp Pro, I designed a gallery-like space with eight pedestals to display collectibles, similar to a real-life gallery. I like the colours that I used in the space, which feel minimalistic and modern. A sofa was placed in each corner so people can sit and rest there. As part of the interior design, I used plants as decorative elements.

Proposed Design

In this experiment, I explore the possibilities of a different marketplace environment for collectibles. Two types of spaces interest me: gallery-like spaces that mimic real life, and abstract ones. I conducted user testing to determine which one works best, and round-table discussions to get feedback.

Development 1

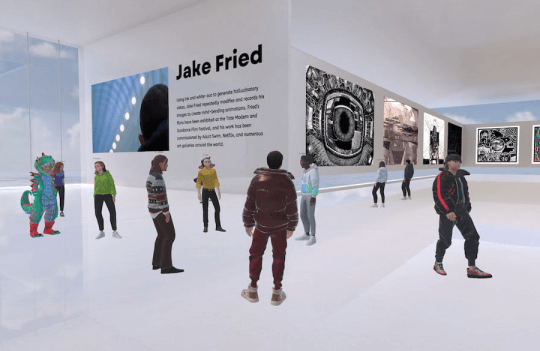

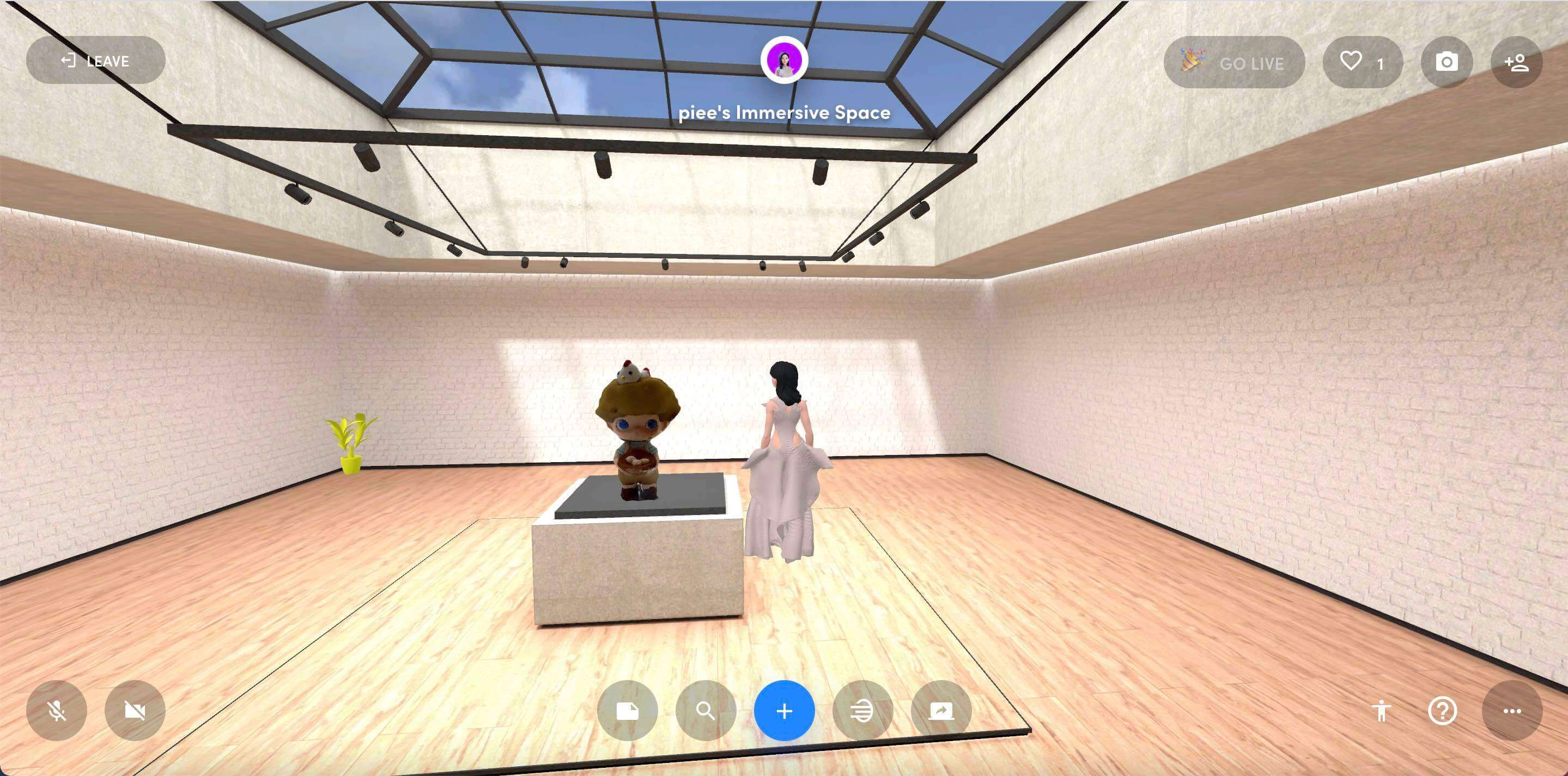

For my first development, I tested Spatial to learn how to use it. First, I experimented with a simple layout. I invited my friends into this space to interact with each other so that we could see how it can be used. I find it interesting because it helps me visualize

Development 2

For my second development, I tested the space with a virtual model I created using SketchUp. The model was exported in GLB format to make it compatible with the Spatial platform. The space has been scaled up to match the avatar, and you can move around and view the collectibles inside the pedestals. I will upload the full video of the space in the coming weeks.

Development 3

For my third development, I wanted the space to have some fun elements compared with the second development. To increase interaction, I imported a 3D model and animated it to move around the room.

Reflection & feedback

I received feedback from Joselyn and Vikas during the round-table discussion. In their opinion, the second development is more fun and interactive because it is more engaging. One of them said that my project is straightforward and that he was glad to see it take shape. They also mentioned that it is a good starting point and that the layout could perhaps be expanded beyond a square. They suggested focusing on the layout of the metaverse more than on scanning the 3D objects. I will continue to work on the Spatial space and will also explore Unity, because it allows more customisation but requires more time to develop.